This blog post kicks off a series of four blog articles. All of them will be available here as soon as they are published.

- Run: Get your Angular, Python or Java Dockerfile ready with Red Hat’s Universal Base Image and deploy with Helm on OpenShift

- Develop: The Inner Loop with OpenShift Dev Spaces

- Build: From upstream Tekton and ArgoCD to OpenShift Pipelines and GitOps

- Deliver: Publish your own Operator with Operator Lifecycle Manager and the OpenShift Operator Catalog

Introduction

Red Hat OpenShift is one of the most sought-after enterprise Kubernetes platforms, is 100% Open Source, and builds fully on Kubernetes. But it is much more than Kubernetes! It integrates hundreds of upstream Open Source projects, enforces enterprise-grade security, and supports not only the run and operate phases but also the develop and build phases, hence the entire software lifecycle.

To deliver on its promise of security and of integrating the different upstream Open Source projects (which Red Hat not only uses but also strongly backs), OpenShift may require you to make some tweaks to an application that you currently operate with docker, docker-compose, or a Kubernetes service from one of the hyperscalers.

Coincidentally, we built an exemplary application called Local News for the book Kubernetes Native Development, which we developed and tested on minikube and on cloud-based Kubernetes services such as EKS, but not on OpenShift! And we didn't just write code and put it into containers; we also used several Open Source projects to develop directly on and with Kubernetes, to enhance the application, and to provide CI/CD pipelines and GitOps. We even built a Kubernetes Operator to simplify sharing, installation, and day-2 operations for this application. Some of these Open Source projects are already integrated into OpenShift, some are not.

In this series of blog articles, we will showcase the journey of this application to OpenShift and how we leveraged the many additional capabilities that OpenShift brings!

Let’s get started with the first one.

Run: Get your Angular, Python or Java Dockerfile ready with Red Hat’s Universal Base Image

Background: The Local News Application

As already introduced, the Local News application is used as a sample in our book Kubernetes Native Development. It serves to demonstrate a wealth of concepts for using Kubernetes along the software lifecycle.

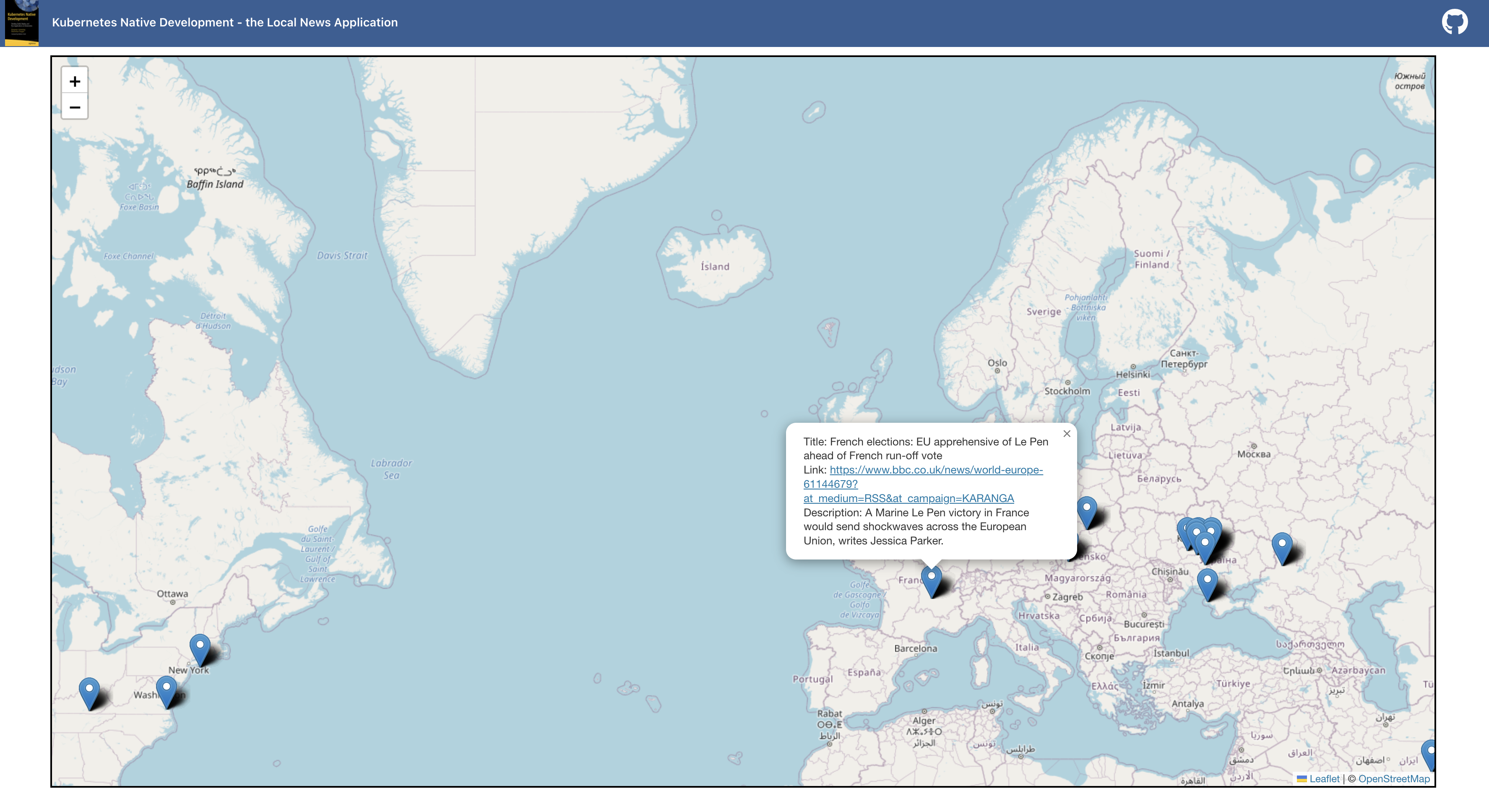

As the name implies, it is about displaying news, but with a reference to a location on a map. Where a news item is placed on the map depends on the location mentioned in its text. An example would be a news article with the following title: “Next Sunday, there will be the Museum Embankment Festival in Frankfurt”. The Local News application analyzes the text using Natural Language Processing (NLP), finds out that the news refers to Frankfurt, and places it on the city of Frankfurt on the map. The screenshot above (Figure 2-1 in the book) shows the user interface of the Local News application. The pins on the map represent the respective news items. When the user clicks on a pin, the details of the news item are displayed.

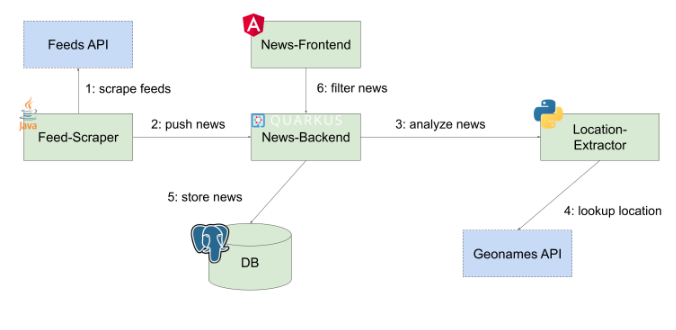

The above image provides a brief overview of the components. First of all, there is a Feed-Scraper that regularly polls a set of given news feeds and sends the news data, such as title and description, to the News-Backend. The News-Backend first stores the news data in a database and then sends a request to the Location-Extractor to analyze the text, extract the name of the location (only the first location found is considered), and return it to the backend. The response contains the coordinates of the location in terms of longitude and latitude, which are then added to the original news data and updated in the database. If users want to see the map with the news, they can use the News-Frontend. This web application renders the map by requesting the news within the bounds of the map's current clipping from the News-Backend. The backend queries its database and returns the news with the stored locations. The frontend marks the news locations on the map, and when the user hovers over the markers, they see the news text.
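To make the data flow above a bit more tangible, here is a minimal in-memory sketch in Python. It is not the actual Local News code: the class and function names, the two-entry gazetteer standing in for the NLP-based extraction, and the bounding-box query are hypothetical simplifications, and the real components are separate services that talk to each other over HTTP.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

# Hypothetical gazetteer standing in for NLP: location name -> (latitude, longitude)
GAZETTEER: Dict[str, Tuple[float, float]] = {
    "Frankfurt": (50.1109, 8.6821),
    "Berlin": (52.5200, 13.4050),
}


@dataclass
class News:
    title: str
    description: str
    lat: Optional[float] = None
    lon: Optional[float] = None


def extract_location(text: str) -> Optional[Tuple[float, float]]:
    """Stand-in for the Location-Extractor: coordinates of the location that
    appears first in the text (only the first location found counts)."""
    first: Optional[Tuple[float, float]] = None
    first_pos = len(text)
    for name, coords in GAZETTEER.items():
        pos = text.find(name)
        if pos != -1 and pos < first_pos:
            first, first_pos = coords, pos
    return first


class NewsBackend:
    def __init__(self) -> None:
        self.db: List[News] = []  # stands in for the real database

    def ingest(self, news: News) -> None:
        """Called by the Feed-Scraper: store first, then enrich and update."""
        self.db.append(news)
        coords = extract_location(f"{news.title} {news.description}")
        if coords is not None:
            news.lat, news.lon = coords  # update the stored record in place

    def news_in_bounds(self, south: float, west: float,
                       north: float, east: float) -> List[News]:
        """Called by the News-Frontend with the map's current bounds."""
        return [n for n in self.db
                if n.lat is not None and n.lon is not None
                and south <= n.lat <= north and west <= n.lon <= east]


backend = NewsBackend()
backend.ingest(News("Next Sunday, there will be the Museum Embankment Festival in Frankfurt", ""))
print(len(backend.news_in_bounds(47.0, 5.0, 55.0, 15.0)))  # 1
```

Storing the record before enriching it mirrors the order described above: the news is persisted even if the location lookup fails.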

GitHub

https://github.com/Apress/Kubernetes-Native-Development

Container Registry

https://quay.io/organization/k8snativedev

Trying it out

The easiest way to try it out is to deploy it with Helm and access the frontend via a NodePort.

But wait a minute: will it work like that if your kubeconfig points to an OpenShift cluster? As indicated above, we might need to make some tweaks! Let's start with the building blocks of our application: the container images and the tuning of our Helm Chart.

The Least Privilege Principle

The OpenShift documentation says:

To further protect RHCOS systems in OpenShift Container Platform clusters, most containers, except those managing or monitoring the host system itself, should run as a non-root user. Dropping the privilege level or creating containers with the least amount of privileges possible is recommended best practice for protecting your own OpenShift Container Platform clusters.

So one may ask why this is necessary. Actually, that's the wrong question! If something does not need root access, it shouldn't have it; this reduces the attack surface and makes the system less prone to potential threats.

Let’s see if we took care of it in our sample application. There is a Helm Chart ready to deploy the Local News application, but it has not been tested on OpenShift yet.

```shell
git clone https://github.com/Apress/Kubernetes-Native-Development
cd Kubernetes-Native-Development
oc login
oc new-project localnews
helm install localnews k8s/helm-chart
```

That should deliver the result shown in the image below.

Two components of our application are in a degraded state. Let’s have a look at it with the following command.

```shell
oc get pods
```

Two of our Pods are in the “CrashLoopBackOff” state, and if we look into the logs of these Pods, we see a permission denied error. This looks suspiciously like something related to root privileges.

Let’s investigate and fix it by having a look at the Dockerfile.

Adapting the Dockerfiles & Helm

News-Frontend component: Angular with NGINX

If you want to follow along on your own system, switch to the "openshift" branch of the Git repo.

```shell
git checkout openshift
```

In the Helm Chart we see that the image quay.io/k8snativedev/news-frontend:latest causes the trouble. To make it easier to follow along, we included a Dockerfile with a multi-stage build process. In a later part, when we show how to move from upstream Tekton and ArgoCD to OpenShift, these two stages will be done in two distinct steps in our delivery pipeline.

The multi-stage Dockerfile below contains two stages. Stage 1 serves to build the application with ng and makes the resulting artifact available to the next stage.

If we look at Stage 2 of the Dockerfile, we see that the image runs a standard nginx from docker.io, exposes port 80 (the nginx default), and finally starts nginx. In the CMD instruction on the last line of the Dockerfile, envsubst renders a settings.template.json file into the standard "settings.json". This allows setting environment variables at each startup of the container, which is something Angular, unfortunately, does not provide out of the box. There is also some code in the application that actually reads the values from the settings.json file.

```dockerfile
# Stage 1: build the app
FROM registry.access.redhat.com/ubi7/nodejs-14 AS builder
WORKDIR /opt/app-root/src
COPY package.json /opt/app-root/src
RUN npm install
COPY . /opt/app-root/src
RUN ng build --configuration production

# Stage 2: serve it with nginx
FROM nginx AS deploy
LABEL maintainer="Max Dargatz"
COPY --from=builder /opt/app-root/src/dist/news-frontend /usr/share/nginx/html
EXPOSE 80
CMD ["/bin/sh", "-c", "envsubst < /usr/share/nginx/html/assets/settings.template.json > /usr/share/nginx/html/assets/settings.json && exec nginx -g 'daemon off;'"]
```

While Stage 1, the build stage, already works with a Red Hat Universal Base Image (UBI) that runs with a non-root user by default, Stage 2, the serving stage, has a few security issues that made it crash with the OpenShift default settings, as we saw earlier. Basically, there are three issues:

- Root user: Nginx from Docker Hub runs as root by default, but the default OpenShift SCC (Security Context Constraint) prevents containers from running with root privileges.

- Privileged ports: by default, ports below 1024 can only be bound with root privileges, and those are not available.

- Directory access: write access to specific directories has to be granted explicitly. Below we see the logs of the news-frontend container, which is stuck in a CrashLoopBackOff state because the aforementioned settings.json file cannot be created.

```
/bin/sh: 1: cannot create /usr/share/nginx/html/assets/settings.json: Permission denied
```
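The three issues can be condensed into a small, hypothetical pre-flight check. The `ContainerSketch` type and the `openshift_issues` function are illustrative assumptions, not part of OpenShift or our repo; they only encode the rules discussed above: no root user, no port below 1024, and writes only where the root group (GID 0) may write.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ContainerSketch:
    """Hypothetical summary of what a container does at startup."""
    image_uid: int                 # UID the image expects to run as
    listen_port: int               # port the server binds inside the container
    writes_to: List[str] = field(default_factory=list)       # files written at startup
    group_writable: List[str] = field(default_factory=list)  # dirs writable by GID 0


def _covered(path: str, dirs: List[str]) -> bool:
    """True if path lies inside one of the given directories."""
    return any(path.startswith(d.rstrip("/") + "/") for d in dirs)


def openshift_issues(c: ContainerSketch, unprivileged_port_start: int = 1024) -> List[str]:
    """Flag the three problems listed above under OpenShift's default
    restricted SCC, which runs the container with an arbitrary non-root UID."""
    issues = []
    if c.image_uid == 0:
        issues.append("expects to run as root")
    if c.listen_port < unprivileged_port_start:
        issues.append(f"binds privileged port {c.listen_port}")
    for path in c.writes_to:
        if not _covered(path, c.group_writable):
            issues.append(f"cannot write {path} as an arbitrary UID")
    return issues


upstream_nginx = ContainerSketch(0, 80, ["/usr/share/nginx/html/assets/settings.json"])
ubi_nginx = ContainerSketch(1001, 8080, ["/opt/app-root/src/assets/settings.json"],
                            ["/opt/app-root/src/assets"])
print(openshift_issues(upstream_nginx))  # three findings
print(openshift_issues(ubi_nginx))       # []
```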

This behaviour needs some more explanation, because it is not just that OpenShift doesn't allow starting containers with root permissions. In OpenShift, each Namespace/Project has a pool of user IDs to draw from. It is defined in an annotation that each OpenShift Project has, and it looks something like openshift.io/sa.scc.uid-range=1000570000/10000.

So the container starts with one of these UIDs, overriding whatever UID the image itself may specify. The problem here is that this "arbitrary" user has no write access to /usr/share/nginx/html/assets/. But as you can see in our CMD instruction, we write the substituted values of some environment variables into a file in exactly that directory.

Now this is exactly the reason why two of our components failed to start. They expect to run as root, but they get assigned a different UID and, hence, lack the permissions they need.
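The UID range annotation reads as "first UID / number of UIDs". The sketch below parses it and shows that the UIDs baked into our images (0 for upstream nginx, 1001 for the UBI) lie outside the project's range, so OpenShift does not use them. The helper names are hypothetical; the assumption that the first UID of the range is assigned by default reflects the MustRunAsRange strategy of the restricted SCC.

```python
from typing import Tuple


def parse_uid_range(annotation: str) -> Tuple[int, int]:
    """Turn an 'openshift.io/sa.scc.uid-range' value such as '1000570000/10000'
    (first UID / block size) into an inclusive (first, last) tuple."""
    first_str, size_str = annotation.split("/")
    first, size = int(first_str), int(size_str)
    return first, first + size - 1


def uid_allowed(uid: int, annotation: str) -> bool:
    """True if the UID falls inside the project's range."""
    first, last = parse_uid_range(annotation)
    return first <= uid <= last


RANGE = "1000570000/10000"  # example value from the text above

# Assumption: with MustRunAsRange, the first UID of the range is assigned when
# the Pod does not request one, regardless of the image's USER instruction.
default_uid = parse_uid_range(RANGE)[0]
print(default_uid)                                      # 1000570000
print(uid_allowed(0, RANGE), uid_allowed(1001, RANGE))  # False False
```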

Now, what are the options to make our container more secure and run it on a fully supported and vetted stack?

- Run NGINX unprivileged

- Rewrite the nginx.conf to listen on an unprivileged port

- Grant our "arbitrary" user access to the assets folder

Solving issues 1 & 2

While it seems like we need a customized nginx base image and have to bother with the config files, we actually don't. We simply use the Universal Base Image (UBI) from Red Hat that runs an unprivileged nginx listening on port 8080. This is a certified base image, fully supported when running on OpenShift, which you can find here. So we change the base image for Stage 2 to the one shown below.

```dockerfile
# Stage 2: serve it with nginx
FROM registry.access.redhat.com/ubi8/nginx-120 AS deploy
```

Solving issue 3

If you review the Dockerfile of the UBI we use as the base image, you see that it uses user 1001. So it is tempting to give the permissions for /opt/app-root/src/assets to user 1001. But remember: even though 1001 is not the root user, OpenShift overrides this UID. Therefore, we have to take a different approach.

The OpenShift documentation provides a solution:

For an image to support running as an arbitrary user, directories and files that are written to by processes in the image must be owned by the root group and be read/writable by that group. Files to be executed must also have group execute permissions.

Adding the following to our Dockerfile sets the directory and file permissions to allow users in the root group to access them in the built image:

```dockerfile
RUN chgrp -R 0 /opt/app-root/src/assets && \
    chmod -R g=u /opt/app-root/src/assets
```

Because the container user is always a member of the root group, the container user can read and write these files. The root group does not have any special permissions (unlike the root user) so there are no security concerns with this arrangement.
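Why `chgrp -R 0` plus `chmod -R g=u` is enough can be checked with a simplified model of POSIX permission evaluation. This sketch is illustrative only: it ignores root's special privileges, ACLs, and SELinux, the function names are hypothetical, and the UIDs are just the example values from this article.

```python
import stat
from typing import Set


def g_equals_u(mode: int) -> int:
    """What 'chmod g=u' does: copy the owner's rwx bits into the group bits."""
    owner_as_group = (mode & stat.S_IRWXU) >> 3  # shift rwx from owner to group slot
    return (mode & ~stat.S_IRWXG) | owner_as_group


def may_write(mode: int, file_uid: int, file_gid: int,
              proc_uid: int, proc_gids: Set[int]) -> bool:
    """Simplified POSIX check: the owner class applies if the UID matches,
    otherwise the group class if any of the process's GIDs matches,
    otherwise 'other'."""
    if proc_uid == file_uid:
        return bool(mode & stat.S_IWUSR)
    if file_gid in proc_gids:
        return bool(mode & stat.S_IWGRP)
    return bool(mode & stat.S_IWOTH)


ARBITRARY_UID = 1000570000  # example UID from the project's range
ROOT_GROUP = {0}            # the container user is always a member of GID 0

# assets/ after 'chgrp -R 0': group 0, owner assumed to be UID 0, mode 0o755
before = 0o755
after = g_equals_u(before)
print(oct(after))                                          # 0o775
print(may_write(before, 0, 0, ARBITRARY_UID, ROOT_GROUP))  # False
print(may_write(after, 0, 0, ARBITRARY_UID, ROOT_GROUP))   # True
```

Copying the owner bits rather than hard-coding a mode keeps executables executable and read-only files read-only for the group.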

After incorporating the three changes we end up with the following Dockerfile, building on the secure and supported foundation of the UBI.

```dockerfile
# Stage 1: build the app
FROM registry.access.redhat.com/ubi8/nodejs-14 AS builder
WORKDIR /opt/app-root/src
COPY package.json /opt/app-root/src
RUN npm install
COPY . /opt/app-root/src
RUN ng build --configuration production

# Stage 2: serve it with nginx
FROM registry.access.redhat.com/ubi8/nginx-120 AS deploy
WORKDIR /opt/app-root/src
LABEL maintainer="Max Dargatz"
COPY --from=builder /opt/app-root/src/dist/news-frontend .
## customize for file permissions for rootless
# switch to root only for the permission changes (COPY creates root-owned files)
USER 0
RUN chgrp -R 0 /opt/app-root/src/assets && \
    chmod -R g=u /opt/app-root/src/assets
# drop back to the image's non-root user
USER 1001
EXPOSE 8080
CMD ["/bin/sh", "-c", "envsubst < /opt/app-root/src/assets/settings.template.json > /opt/app-root/src/assets/settings.json && exec nginx -g 'daemon off;'"]
```
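The CMD above relies on envsubst to produce settings.json at container startup. As a rough illustration of what that step does, here is a Python approximation of GNU envsubst's default behaviour: `$NAME` and `${NAME}` are replaced, and unset variables become empty strings. The template content and the variable names `BACKEND_PREFIX`, `BACKEND_HOST`, and `BACKEND_PORT` are hypothetical; the real settings.template.json in the repo may look different.

```python
import json
import os
import re
from typing import Mapping, Optional

# Matches ${NAME} and $NAME, the two reference forms envsubst replaces.
_VAR = re.compile(r"\$(?:\{([A-Za-z_][A-Za-z0-9_]*)\}|([A-Za-z_][A-Za-z0-9_]*))")


def envsubst(template: str, env: Optional[Mapping[str, str]] = None) -> str:
    """Approximate GNU envsubst: substitute variable references with values
    from env (default: the process environment); unset variables become ''."""
    source = os.environ if env is None else env
    return _VAR.sub(lambda m: source.get(m.group(1) or m.group(2), ""), template)


# Hypothetical settings.template.json content; keys and variable names are made up.
TEMPLATE = '{"backendUrl": "${BACKEND_PREFIX}${BACKEND_HOST}:${BACKEND_PORT}"}'

settings = json.loads(envsubst(TEMPLATE, {
    "BACKEND_PREFIX": "http://",
    "BACKEND_HOST": "news-backend",
    "BACKEND_PORT": "8080",
}))
print(settings["backendUrl"])  # http://news-backend:8080
```

Because the file is rendered on every container start, the same image can be pointed at a different backend just by changing environment variables in the Helm values.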

Location Extractor: Python

For the Location Extractor component, which extracts locations and looks up their coordinates, we will not go into the same depth. But we have to fix it. In our original approach, the Python app was started from an image from Docker Hub (the official Python image) with supervisord running nginx and a WSGI server. The issues are actually pretty similar to those described in the previous part.

One possible solution is again a multi-stage build. Now you might say: wait a minute, Python is an interpreted language, so why is this necessary? It actually isn't a requirement, but it still seems reasonable to separate Stage 1, which installs the dependencies and a machine learning model for the Open Source library spaCy (see the last RUN instruction of Stage 1), from Stage 2, where the application is started with the WSGI server Gunicorn. And again, in both stages we build on a fully supported UBI with Python 3.9 installed.

```dockerfile
# Stage 1: package the app and dependencies in a venv
FROM registry.access.redhat.com/ubi8/python-39 AS build
WORKDIR /opt/app-root/src
RUN python -m venv /opt/app-root/src/venv
ENV PATH="/opt/app-root/src/venv/bin:$PATH"
COPY requirements.txt .
RUN pip3 install -r requirements.txt && python3 -m spacy download en_core_web_md

# Stage 2: start the application with gunicorn
FROM registry.access.redhat.com/ubi8/python-39
WORKDIR /opt/app-root/src
COPY --from=build /opt/app-root/src/venv ./venv
COPY ./src /opt/app-root/src/src
ENV PATH="/opt/app-root/src/venv/bin:$PATH"
USER 1001
CMD ["gunicorn", "--bind", "0.0.0.0:5000", "src.wsgi:app" ]
```
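For orientation, gunicorn's `src.wsgi:app` argument means "import the module `src/wsgi.py` and serve its `app` callable". Below is a hypothetical, standard-library-only stand-in for that module. The `text` query parameter, the JSON response shape, and the lookup table that replaces the spaCy model are assumptions for illustration, not the actual Location Extractor code.

```python
"""Hypothetical stand-in for src/wsgi.py, the module gunicorn loads via 'src.wsgi:app'."""
import json
from urllib.parse import parse_qs

# Placeholder for the spaCy model: location name -> coordinates (made-up subset).
KNOWN_LOCATIONS = {"Frankfurt": {"lat": 50.1109, "lon": 8.6821}}


def app(environ, start_response):
    """WSGI entry point: reads ?text=..., returns a known location as JSON."""
    text = parse_qs(environ.get("QUERY_STRING", "")).get("text", [""])[0]
    match = next(({"name": name, **coords}
                  for name, coords in KNOWN_LOCATIONS.items() if name in text), None)
    status = "200 OK" if match else "404 Not Found"
    body = json.dumps(match or {"error": "no location found"}).encode("utf-8")
    start_response(status, [("Content-Type", "application/json"),
                            ("Content-Length", str(len(body)))])
    return [body]
```

Inside the image, such a module would be started exactly as the CMD above does, with `gunicorn --bind 0.0.0.0:5000 src.wsgi:app`; port 5000 is unprivileged, so no root user is needed.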

The other components: Quarkus & Java

The other components were already working without any adjustments. Still, if we look at the Git repo and the Dockerfiles for the news-backend component, a Quarkus application, and the feed-scraper component, a Java EE application, we discover that we can also leverage the UBI here, e.g. by using openjdk-11 or openjdk-11-runtime.

If we apply all the changes above to our images and configure our Helm Chart accordingly, all our application components run on a Red Hat certified stack.

Configuring the Helm Chart

In the Git repo we provide a new values file that reflects the changes we made to the container images of the news-frontend and location-extractor components. We rebuilt the images, tagged them with openshift, and reflected the changes in values-openshift.yaml.

Another thing we have to think about is how to expose the application. If you are familiar with Kubernetes but not with OpenShift, you'll know the Kubernetes Ingress. OpenShift, however, exposes apps to the public via OpenShift Routes.

The nice thing about OpenShift Routes is that they get created automatically if we provide an Ingress resource. There are two components we have to expose to the public: the news-frontend and the news-backend, because the Angular web application connects to the news-backend from the client side. Both Ingresses are already prepared as Helm templates. The only thing we have to do is provide our cluster's specific ingress subdomain when deploying the application and pass our new values-openshift.yaml instead of the standard values.yaml file to the helm command.

```shell
helm upgrade -i localnews k8s/helm-chart -f k8s/helm-chart/values-openshift.yaml \
  --set newsfrontend.backendConnection="viaIngress" \
  --set newsfrontend.envVars.backend.prefix.value="http://" \
  --set newsfrontend.envVars.backend.nodePort.value="80" \
  --set localnews.domain="mycluster.eu-de.containers.appdomain.cloud"
```
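Each `--set` flag above overrides a single dot-separated key in the values loaded from values-openshift.yaml. The sketch below models that merge in a simplified way. The function name and the `file_values` excerpt are hypothetical, and unlike real Helm the sketch keeps every value as a string (Helm coerces `80` to a number, for example) and supports neither list indices nor escaped dots.

```python
from copy import deepcopy
from typing import Any, Dict, List


def apply_set_flags(values: Dict[str, Any], flags: List[str]) -> Dict[str, Any]:
    """Simplified model of 'helm --set a.b.c=v': walk (and create) nested maps
    along the dotted key and overwrite the last segment."""
    merged = deepcopy(values)  # leave the values loaded from the file untouched
    for flag in flags:
        key, _, value = flag.partition("=")  # split on the first '=' only
        *parents, leaf = key.split(".")
        node = merged
        for part in parents:
            node = node.setdefault(part, {})
        node[leaf] = value
    return merged


# Hypothetical excerpt of values-openshift.yaml, already parsed into a dict.
file_values = {"localnews": {"domain": "placeholder.example"}}

# The flags as helm receives them after the shell has removed the quotes.
flags = [
    "newsfrontend.backendConnection=viaIngress",
    "newsfrontend.envVars.backend.prefix.value=http://",
    "newsfrontend.envVars.backend.nodePort.value=80",
    "localnews.domain=mycluster.eu-de.containers.appdomain.cloud",
]
final = apply_set_flags(file_values, flags)
print(final["localnews"]["domain"])  # mycluster.eu-de.containers.appdomain.cloud
```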

What we observe is that even though we provided only an Ingress resource, OpenShift automatically creates a Route for us, which is then publicly accessible.
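Conceptually, OpenShift turns each host/path rule of an Ingress into a Route that points at the same Service. The function below is a heavily simplified, hypothetical model of that translation, using only well-known fields of `networking.k8s.io/v1` Ingress and `route.openshift.io/v1` Route objects. The real controller also handles TLS, annotations, naming, and ownership, which are left out here, and the example host name is made up rather than taken from our chart.

```python
from typing import Any, Dict, List


def routes_from_ingress(ingress: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Hypothetical, heavily simplified Ingress -> Route translation:
    one Route per host/path rule, pointing at the same Service and port."""
    routes = []
    for rule in ingress["spec"].get("rules", []):
        for http_path in rule.get("http", {}).get("paths", []):
            service = http_path["backend"]["service"]
            port = service["port"]
            routes.append({
                "apiVersion": "route.openshift.io/v1",
                "kind": "Route",
                "spec": {
                    "host": rule["host"],
                    "path": http_path.get("path", "/"),
                    "to": {"kind": "Service", "name": service["name"]},
                    # Ingress backends reference the Service port by name or number.
                    "port": {"targetPort": port.get("name", port.get("number"))},
                },
            })
    return routes


frontend_ingress = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "Ingress",
    "metadata": {"name": "news-frontend"},
    "spec": {"rules": [{
        "host": "news-frontend.mycluster.eu-de.containers.appdomain.cloud",  # hypothetical
        "http": {"paths": [{
            "path": "/", "pathType": "Prefix",
            "backend": {"service": {"name": "news-frontend", "port": {"number": 80}}},
        }]},
    }]},
}
for route in routes_from_ingress(frontend_ingress):
    print(route["spec"]["host"], "->", route["spec"]["to"]["name"])
```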

Leverage OpenShift

We have seen that with the Red Hat certified Container Catalog, which contains the different flavours of the UBI, and OpenShift as a platform, we can easily roll out our application with Helm in a secure and supported way, using base images from a vetted source. We can now manage our Helm release via OpenShift and configure our application in the Developer view.

Outlook

In this article we learned a few things about how OpenShift, together with Red Hat's Universal Base Image, helps you build and run your apps on a unified, regularly updated and patched basis, and thus much more securely. And, somewhat like the guy in the picture below, I was pleased with myself after fixing these issues (yep, I like MMA 🙂 ).

But, unfortunately, ensuring a really advanced level of container security takes a few more steps, for example with Red Hat Advanced Cluster Security (ACS). After scanning my application with ACS, my feelings were much closer to this:

My application urgently needed:

- a rebuild with current versions of the UBI to fix some CVEs,

- resource limits to ensure it doesn't become a noisy neighbour,

- to run with a non-default service account, because the default one is used by every Pod that doesn't specify its own, and its API token is mounted automatically,

- the removal of package managers that were present in a few images and make installing malicious tools at least a possibility,

- a solution for an anomalous egress from my database,

- and a few other less severe things…
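Some of these findings can be spotted directly in a Pod manifest. The check below is a hypothetical, much-reduced illustration that works on a manifest already loaded into a Python dict; it is not how ACS evaluates its policies, and the service account name `localnews` in the example is made up.

```python
from typing import Any, Dict, List


def hardening_findings(pod: Dict[str, Any]) -> List[str]:
    """Flag a few of the issues listed above in a Pod manifest (as a dict)."""
    spec = pod.get("spec", {})
    findings = []
    if spec.get("serviceAccountName", "default") == "default":
        findings.append("uses the default service account")
    # Kubernetes mounts the service account token unless this is set to false.
    if spec.get("automountServiceAccountToken", True):
        findings.append("service account token is mounted")
    for container in spec.get("containers", []):
        limits = container.get("resources", {}).get("limits", {})
        for resource in ("cpu", "memory"):
            if resource not in limits:
                findings.append(f"{container.get('name', '?')}: no {resource} limit")
    return findings


hardened = {"spec": {
    "serviceAccountName": "localnews",  # hypothetical service account
    "automountServiceAccountToken": False,
    "containers": [{"name": "news-backend",
                    "resources": {"limits": {"cpu": "500m", "memory": "256Mi"}}}],
}}
print(hardening_findings({"spec": {"containers": [{"name": "news-backend"}]}}))
print(hardening_findings(hardened))  # []
```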

You probably won’t need to fix all of these issues at every stage from development all the way to production. For instance, in development you might want some advanced debug options in your container images. But if you really care about container security, do the homework that OpenShift gives you and, additionally, consult a tool such as ACS to help you run securely in production. Here is a good read and a good view to dive deeper into the topic.

That concludes the first part, which focused solely on RUNNING our application. Stay tuned for the next three parts about developing, then building, and finally delivering your application as an Operator with GitOps.

Authors

Benjamin Schmeling

Benjamin Schmeling is a solution architect at Red Hat with more than 15 years of experience in developing, building, and deploying Java-based software. His passion is the design and implementation of cloud-native applications running on Kubernetes-based container platforms.

Maximilian Dargatz

I am Max, live in Germany, Saxony-Anhalt, love the family-things of life but also the techie things, particularly Kubernetes, OpenShift and some Machine Learning.
